Gamma, Erlang, Chi-square distributions... all the same beast

June 09, 2021

Probably the most important distribution in the whole field of mathematical statistics is the Gamma distribution. Its special cases arise in various branches of mathematics under different names, e.g. Erlang or Chi-square (and the Weibull distribution is also strongly related), but they are essentially the same family of distributions, and this post is supposed to provide some intuition about them.

I’ll first explain how the Gamma distribution relates to the Poisson distribution, using a practical example: calculating the crash probability of a distributed file system. After that I’ll describe the special cases of the Gamma distribution, stating the problems in which they occur.

Gamma and Poisson distributions: same process, different questions

Suppose you have an array of inexpensive unreliable hard drives like WD Caviar Green, where each individual hard drive fails on any given day with probability $p$.

You are planning to build a distributed file system with 1000 disks like these, and you need to decide whether to use a RAID5 or a RAID6 array for it. RAID5 tolerates a failure of 1 disk (so if 2 disks crash on the same day, your cluster is dead), while RAID6 tolerates a simultaneous failure of 2 disks (so if 3 disks crash on the same day, your cluster is dead).

Poisson distribution

Let us calculate the probability of a failure of k=3 drives, given N=1000 disks total. This probability is approximated by Poisson distribution, which we will derive from binomial distribution:

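$$p(k) = C_N^k \, p^k (1-p)^{N-k},$$

where $C_N^k = \frac{N!}{k!(N-k)!}$.

First, let’s replace $p$ with $\frac{\lambda}{N}$, where $\lambda = Np$ is the parameter of the Poisson distribution. So, we get:

$$p(k) = \frac{N!}{k!(N-k)!} \frac{\lambda^k}{N^k} \left(1 - \frac{\lambda}{N}\right)^{N-k}$$

We can apply some assumptions now. We know that $k \ll N$ and $p$ is small.

Now, let’s recall that $\left(1 - \frac{\lambda}{N}\right)^{N} \approx e^{-\lambda}$ for large $N$ and neglect $\left(1 - \frac{\lambda}{N}\right)^{-k}$, as it is close to 1. So we get:

$$p(k) = \frac{N!}{k!(N-k)! \, N^k} \lambda^k e^{-\lambda}.$$

Next let’s also neglect the difference between $N(N-1)\cdots(N-k+1)$ and $N^k$, so that $\frac{N!}{(N-k)!} \approx N^k$ and we can cancel it out, so that we get the final form of the Poisson distribution:

$$p(k) = \frac{\lambda^k e^{-\lambda}}{k!}$$

Hence, our RAID6 will go south due to a simultaneous failure of 3 hard drives in one day with probability $p(k=3) = \frac{\lambda^3 e^{-\lambda}}{3!}$, where $\lambda = Np$.

Seems small, but the probability of this happening at least once in 5 years is around 25%: $1 - (1 - p(k=3))^{365 \cdot 5} \approx 0.25$. So the described cluster isn’t really viable.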
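The arithmetic above can be sketched in a few lines of Python. The per-day failure probability is not stated numerically in the text, so `P_FAIL = 1e-4` below is an assumption, chosen only because it reproduces the "around 25%" figure; the function names are illustrative as well.

```python
import math

def binomial_pmf(k: int, n: int, p: float) -> float:
    """Exact probability of k failures among n independent disks."""
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)

def poisson_pmf(k: int, lam: float) -> float:
    """Poisson approximation with lam = n * p."""
    return lam**k * math.exp(-lam) / math.factorial(k)

N_DISKS = 1000
P_FAIL = 1e-4      # ASSUMED per-disk, per-day failure probability (not given in the text)
K_FAILURES = 3     # RAID6 dies when 3 disks fail on the same day
DAYS = 365 * 5

p_binom = binomial_pmf(K_FAILURES, N_DISKS, P_FAIL)
p_poisson = poisson_pmf(K_FAILURES, N_DISKS * P_FAIL)
# Probability of at least one "3 failures in a day" event over 5 years
p_five_years = 1 - (1 - p_poisson) ** DAYS

print(f"binomial: {p_binom:.3e}, poisson: {p_poisson:.3e}")
print(f"5-year crash probability: {p_five_years:.3f}")
```

Under this assumed value the binomial and Poisson answers agree to within about one percent, which is the point of the approximation.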

Gamma distribution

We’ve already found out that 1000 Caviar Green HDDs is too much for RAID6. Now, we might want to ask a different (somewhat contrived in this case) question.

Suppose that we know that a RAID6 cluster went down due to a failure of 3 drives. What was the probability that there were 1000 (or 100 or 10000) hard drives in that cluster?

Note that in the previous case the number of disks was a fixed parameter and the number of crashes was a variable, and this time it’s the other way around: the number of crashes is a fixed parameter, while the total number of disks is a variable. We’re solving a very similar problem, which the Bayesians would call conjugate.

$N$ can be interpreted as the stopping time of a process that runs until $k$ failures happen. For instance, if you were dropping paratroopers one by one from a plane and each paratrooper had a probability $p$ that his parachute doesn’t work, you might have asked yourself what the probability would be that you’d managed to drop 1000 troopers before the parachutes of 3 of them malfunctioned.

Let’s keep the notation, and show that the probability in question is described by Gamma distribution.

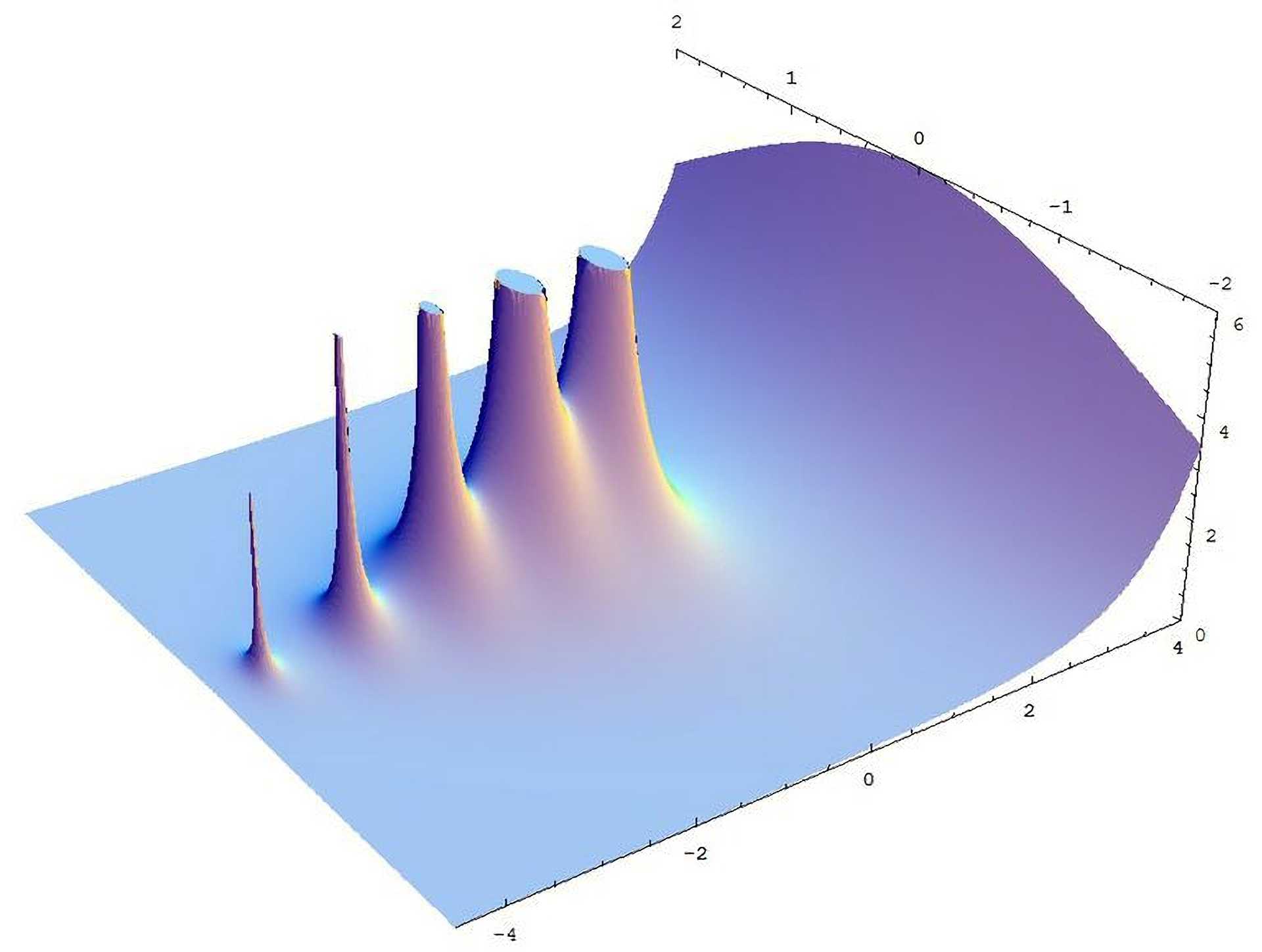

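$$p(N \mid k) = \frac{p(k \mid N)}{\sum_{n} p(k \mid n)} = \frac{\frac{(Np)^k e^{-Np}}{k!}}{\sum_{n} \frac{(np)^k e^{-np}}{k!}} = \frac{(Np)^k e^{-Np}}{\sum_{n} (np)^k e^{-np}}.$$

Now, recall that the gamma function is defined as $\Gamma(k) = \int_0^\infty x^{k-1} e^{-x} dx$, which is suspiciously reminiscent of the denominator of the probability above.

Let’s change the notation to $x = Np$, so that $\sum_{n} (np)^k e^{-np} \approx \frac{1}{p} \int_0^\infty x^k e^{-x} dx = \frac{\Gamma(k+1)}{p}$, and we almost get $p(x) = \frac{x^k e^{-x}}{\Gamma(k+1)}$, which is very close to the definition of the Gamma distribution.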

I wasn’t accurate here with the transition from a sum to an integral, with sum/integral limits and with multiplier; however, it gives you an idea of what’s going on.
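The sum-to-integral step can at least be sanity-checked numerically. The sketch below compares the sum over $n$ of $(np)^k e^{-np}$ with $\Gamma(k+1)/p$; the values `P_FAIL = 1e-4` and `K = 3` are assumptions picked for illustration, and the sum is truncated where the terms become negligible.

```python
import math

P_FAIL = 1e-4   # ASSUMED per-item failure probability (illustrative)
K = 3           # number of failures

# Truncate the infinite sum at n * p = 40, where x^K * exp(-x) is negligible
n_max = int(40 / P_FAIL)
riemann_sum = sum((n * P_FAIL) ** K * math.exp(-n * P_FAIL) for n in range(n_max + 1))

# Integral of x^K * exp(-x) over [0, inf) is Gamma(K + 1); dn = dx / p gives the 1/p factor
integral = math.gamma(K + 1) / P_FAIL

print(f"sum over n: {riemann_sum:.6f}")
print(f"Gamma(k+1)/p: {integral:.6f}")
```

For a small step $p$ the two numbers are practically indistinguishable, which is why treating the sum as an integral does little harm here.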

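So, to make this argument more rigorous, instead of discrete values of $N$, corresponding to the total number of hard drives or paratroopers, I’ll transition to a continuous real-valued $t$ (you may interpret it as continuous time), with failures happening at a rate of $p$ per unit of $t$.

As we know the cumulative distribution function of our Gamma process from its Poisson counterpart, $F(t) = 1 - \sum_{i=0}^{k-1} \frac{(pt)^i e^{-pt}}{i!}$ (fewer than $k$ failures by time $t$ means that the $k$-th failure happens later than $t$), we can infer the probability density function from it. I’ll be following the general logic of this post by Aerin Kim.

$$f(t) = \frac{dF(t)}{dt} = \sum_{i=0}^{k-1} \frac{p (pt)^i e^{-pt}}{i!} - \sum_{i=1}^{k-1} \frac{p (pt)^{i-1} e^{-pt}}{(i-1)!} = \frac{p (pt)^{k-1} e^{-pt}}{(k-1)!} = \frac{p^k t^{k-1} e^{-pt}}{\Gamma(k)}.$$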
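As a rough check of this step, one can differentiate the Poisson-based cumulative distribution function numerically and compare the slope with the Gamma density. This is a minimal sketch: the names `waiting_time_cdf`, `gamma_pdf`, `rate`, and the values of `K` and `RATE` are illustrative assumptions, not notation from the post.

```python
import math

def waiting_time_cdf(t: float, k: int, rate: float) -> float:
    """P(k-th failure happens before t) = 1 - P(fewer than k Poisson events in [0, t])."""
    mean = rate * t
    return 1.0 - sum(mean**i * math.exp(-mean) / math.factorial(i) for i in range(k))

def gamma_pdf(t: float, k: int, rate: float) -> float:
    """Gamma density with shape k and the given rate."""
    return rate**k * t ** (k - 1) * math.exp(-rate * t) / math.gamma(k)

def numeric_derivative(f, t: float, h: float = 1e-5) -> float:
    """Central finite difference approximation of f'(t)."""
    return (f(t + h) - f(t - h)) / (2 * h)

K, RATE = 3, 0.5  # illustrative values

for t in (0.5, 2.0, 7.5):
    slope = numeric_derivative(lambda s: waiting_time_cdf(s, K, RATE), t)
    print(f"t={t}: dF/dt={slope:.8f}, gamma pdf={gamma_pdf(t, K, RATE):.8f}")
```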

From the Bayesian standpoint, what we did here in order to calculate the Gamma distribution was $p(N \mid k) = \frac{p(k \mid N) \, p(N)}{p(k)}$, where $p(k \mid N)$ is Poisson-distributed in case $Np$ is a small constant. The Gamma distribution is called the conjugate prior for Poisson. The Bayesian approach might be harmful for your sanity, however, as there is no way to rationalize the priors for p(N) and p(n) (assumed equal here).

Special cases of Gamma distribution

Erlang distribution

Erlang distribution is a special case of the same Gamma distribution that arises in queueing theory (also known as mass service theory), with the special condition that $k$ is an integer (as in the case we considered above).

Agner Krarup Erlang was a Danish mathematician and engineer, who worked on mathematical models of reliability of service in the early days of telephony in the 1900-1910s. He was studying the probability of denial of service or poor quality of service in a telephone network of limited bandwidth. He actually came up with multiple models, which I won’t consider in full here, but they are nicely described in this paper by Hideaki Takagi.

Suppose that you join a queue with 1 cashier, where $k$ people are ahead of you. The time that it takes the cashier to service a single person is described by an exponential distribution, so that the probability density function of the time $t$ spent on servicing a single person is $f(t) = \lambda e^{-\lambda t}$, and the probability that the service takes more than $t$ equals $e^{-\lambda t}$. What is the probability that you’ll be serviced in $t$ minutes? This example is stolen from this post.

So, essentially we are dealing with a sum of independent identically distributed exponential variables, and, as any sum of i.i.d. variables with finite variance, it will converge to a normal distribution as the number of terms grows. But prior to that, for any finite number of terms, it follows an Erlang/Gamma distribution.

To show that, we’ll have to make use of convolutions. I prefer thinking of them in a discrete matrix form, but the same logic can be applied in the continuous case, replacing the sum with an integral.

What is the probability that service of 2 persons will take the cashier 5 minutes? It can be calculated as a sum of probabilities:

p(servicing 2 persons took 5 minutes) = p(person 1 took 1 minute) * p(person 2 took 4 minutes) + p(person 1 took 2 minutes) * p(person 2 took 3 minutes) + p(person 1 took 3 minutes) * p(person 2 took 2 minutes) + p(person 1 took 4 minutes) * p(person 2 took 1 minutes).
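The sum above is a discrete convolution, which can be sketched as follows. The per-person service-time probabilities in `service_pmf` are made-up numbers used only for illustration.

```python
def convolve_pmf(pmf_a: list[float], pmf_b: list[float]) -> list[float]:
    """Distribution of the sum of two independent variables.

    pmf_x[i] is the probability that the variable equals i (minutes).
    """
    out = [0.0] * (len(pmf_a) + len(pmf_b) - 1)
    for i, pa in enumerate(pmf_a):
        for j, pb in enumerate(pmf_b):
            out[i + j] += pa * pb
    return out

# HYPOTHETICAL service-time distribution: index = minutes, index 0 is impossible
service_pmf = [0.0, 0.4, 0.3, 0.2, 0.1]

two_people = convolve_pmf(service_pmf, service_pmf)

# p(5 minutes total) = 0.4*0.1 + 0.3*0.2 + 0.2*0.3 + 0.1*0.4 = 0.20
print(f"p(servicing 2 persons took 5 minutes) = {two_people[5]:.2f}")
```

Applying `convolve_pmf` repeatedly gives the distribution of the total service time for 3, 4 or more people in the same way.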

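Or in a matrix notation, where $p_i(t)$ denotes the probability that person $i$ took $t$ minutes:

$$p(t_1 + t_2 = 5) = \begin{pmatrix} p_1(1) & p_1(2) & p_1(3) & p_1(4) \end{pmatrix} \begin{pmatrix} p_2(4) \\ p_2(3) \\ p_2(2) \\ p_2(1) \end{pmatrix}$$

Or to write it in a short formula:

$$p(t_1 + t_2 = 5) = \sum_{t=1}^{4} p_1(t) \, p_2(5 - t)$$

Now, in the continuous case, instead of summing over the probabilities, we will be integrating over probability density functions (think of it as if your probability vectors and convolution matrices have become infinite-dimensional):

$$f_{t_1 + t_2}(t) = \int_0^t f_{t_1}(s) \, f_{t_2}(t - s) \, ds$$

In the case of $f_{t_1}(t) = f_{t_2}(t) = \lambda e^{-\lambda t}$, we get:

$$f_{t_1 + t_2}(t) = \int_0^t \lambda e^{-\lambda s} \, \lambda e^{-\lambda (t - s)} \, ds = \lambda^2 e^{-\lambda t} \int_0^t ds = \lambda^2 t e^{-\lambda t}$$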

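Now, if we recall that $\Gamma(k) = (k-1)!$ for integer $k$, and hence $\Gamma(2) = 1$, we’ve gotten the Gamma distribution $\frac{\lambda^k t^{k-1} e^{-\lambda t}}{\Gamma(k)}$ with $k = 2$ from the previous section.

Let us show that these formulae are correct by induction. First, let’s derive the Erlang distribution with 1 degree of freedom, which is just the exponential distribution:

$$f_1(t) = \lambda e^{-\lambda t} = \frac{\lambda^1 t^0 e^{-\lambda t}}{0!}$$

Now let’s derive the Erlang distribution with k+1 degrees of freedom from the Erlang distribution with k degrees:

$$f_{k+1}(t) = \int_0^t f_k(s) \, f_1(t - s) \, ds = \int_0^t \frac{\lambda^k s^{k-1} e^{-\lambda s}}{(k-1)!} \, \lambda e^{-\lambda (t - s)} \, ds = \frac{\lambda^{k+1} e^{-\lambda t}}{(k-1)!} \int_0^t s^{k-1} ds = \frac{\lambda^{k+1} t^k e^{-\lambda t}}{k!}$$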
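The induction step can also be checked numerically: convolving the Erlang density with $k$ degrees of freedom with one more exponential density should give the Erlang density with $k+1$ degrees. Below is a minimal sketch using a midpoint-rule integral; the function names and the values of `LAM` and `T` are illustrative assumptions.

```python
import math

def erlang_pdf(t: float, k: int, lam: float) -> float:
    """Erlang density with integer shape k and rate lam (k = 1 is the exponential)."""
    return lam**k * t ** (k - 1) * math.exp(-lam * t) / math.factorial(k - 1)

def convolve_with_exponential(t: float, k: int, lam: float, steps: int = 2000) -> float:
    """Midpoint-rule approximation of the integral of f_k(s) * f_1(t - s) over [0, t]."""
    h = t / steps
    total = 0.0
    for i in range(steps):
        s = (i + 0.5) * h
        total += erlang_pdf(s, k, lam) * erlang_pdf(t - s, 1, lam)
    return total * h

LAM, T = 0.5, 4.0  # illustrative rate and time

for k in (1, 2, 3):
    numeric = convolve_with_exponential(T, k, LAM)
    closed_form = erlang_pdf(T, k + 1, LAM)
    print(f"k={k}: convolution={numeric:.8f}, Erlang(k+1)={closed_form:.8f}")
```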

Chi-square distribution

Chi-square distribution is ubiquitous in mathematical statistics, especially in its applications to medicine and biology.

The most classical situation where it arises is when we are looking at the distribution of a sum of squares of Gaussian random variables:

$\chi^2_k = \sum_{i=1}^{k} \xi_i^2$, where $\xi_i \sim \mathcal{N}(0, 1)$

Probability density function of Chi-square with 1 degree of freedom

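The easiest way to derive the distribution of chi-square is to take a Gaussian-distributed random variable $\xi \sim \mathcal{N}(0, 1)$ and to show that $\xi^2$ is actually a Gamma-distributed random variable, very similar to the Erlang distribution:

$$F_{\xi^2}(x) = p(\xi^2 \le x) = p(-\sqrt{x} \le \xi \le \sqrt{x}) = 2 \int_0^{\sqrt{x}} \frac{1}{\sqrt{2\pi}} e^{-\frac{t^2}{2}} dt,$$

$$f_{\xi^2}(x) = \frac{dF_{\xi^2}(x)}{dx} = \frac{2}{\sqrt{2\pi}} e^{-\frac{x}{2}} \cdot \frac{1}{2\sqrt{x}} = \frac{x^{-\frac{1}{2}} e^{-\frac{x}{2}}}{\sqrt{2\pi}}.$$

So if we recall the pdf of the Gamma distribution, $f(x) = \frac{\lambda^{\alpha} x^{\alpha - 1} e^{-\lambda x}}{\Gamma(\alpha)}$, and the fact that $\Gamma(\frac{1}{2}) = \sqrt{\pi}$, the chi-square distribution with 1 degree of freedom is nothing more than a Gamma distribution with $\alpha = \frac{1}{2}$ and $\lambda = \frac{1}{2}$.

It is also very close to the Erlang distribution $\frac{\lambda^k t^{k-1} e^{-\lambda t}}{(k-1)!}$, but for Erlang the power of $\lambda$ (the shape parameter) can be integer only, while in Chi-square it is $\frac{k}{2}$, where $k$ is integer.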
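A quick numerical sanity check of this identification is sketched below: the density of a squared standard Gaussian is compared with the Gamma density for shape 1/2 and rate 1/2, and a seeded Monte Carlo run compares the empirical share of squared Gaussians below 1 with the exact value. The function names are illustrative assumptions.

```python
import math
import random

def gamma_pdf(x: float, shape: float, rate: float) -> float:
    """Gamma density with the given shape and rate."""
    return rate**shape * x ** (shape - 1) * math.exp(-rate * x) / math.gamma(shape)

def chi2_1_pdf(x: float) -> float:
    """Density of the square of a standard Gaussian variable."""
    return x ** -0.5 * math.exp(-x / 2) / math.sqrt(2 * math.pi)

for x in (0.1, 1.0, 4.0):
    print(f"x={x}: chi2_1={chi2_1_pdf(x):.8f}, Gamma(1/2, 1/2)={gamma_pdf(x, 0.5, 0.5):.8f}")

# Monte Carlo: share of squared Gaussians below 1 vs exact P(|xi| <= 1)
rng = random.Random(0)
samples = [rng.gauss(0.0, 1.0) ** 2 for _ in range(200_000)]
empirical = sum(s <= 1.0 for s in samples) / len(samples)
exact = math.erf(math.sqrt(1.0 / 2))
print(f"empirical={empirical:.4f}, exact={exact:.4f}")
```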

For reference, see this and this.

Probability density function for Chi-square with k degrees of freedom

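Again, let us use the convolution formula, this time for the sum of two chi-square variables with 1 degree of freedom each:

$$f_{\chi^2_2}(x) = \int_0^x f_{\chi^2_1}(s) \, f_{\chi^2_1}(x - s) \, ds = \int_0^x \frac{s^{-\frac{1}{2}} e^{-\frac{s}{2}}}{\sqrt{2\pi}} \cdot \frac{(x-s)^{-\frac{1}{2}} e^{-\frac{x-s}{2}}}{\sqrt{2\pi}} \, ds = \frac{e^{-\frac{x}{2}}}{2\pi} \int_0^x s^{-\frac{1}{2}} (x-s)^{-\frac{1}{2}} ds.$$

Now we do a variable substitution $s = xt$, $ds = x \, dt$. Also note the corresponding change of the upper integration limit to 1:

$$f_{\chi^2_2}(x) = \frac{e^{-\frac{x}{2}}}{2\pi} \int_0^1 (xt)^{-\frac{1}{2}} (x - xt)^{-\frac{1}{2}} x \, dt = \frac{e^{-\frac{x}{2}}}{2\pi} \int_0^1 t^{-\frac{1}{2}} (1-t)^{-\frac{1}{2}} dt$$

Our integral now is well-known as the Beta function $B(\frac{1}{2}, \frac{1}{2})$, another fun function, closely related to the Gamma function. The Beta function $B(a, b) = \int_0^1 t^{a-1} (1-t)^{b-1} dt$ is a constant that can be expressed through the Gamma function as $B(a, b) = \frac{\Gamma(a)\Gamma(b)}{\Gamma(a+b)}$. Here $B(\frac{1}{2}, \frac{1}{2}) = \pi$, so $f_{\chi^2_2}(x) = \frac{e^{-\frac{x}{2}}}{2}$.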

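Now, in the general case the $\chi^2$-distribution with k+1 degrees of freedom is:

$$f_{\chi^2_{k+1}}(x) = \int_0^x f_{\chi^2_k}(s) \, f_{\chi^2_1}(x - s) \, ds = \int_0^x \frac{s^{\frac{k}{2} - 1} e^{-\frac{s}{2}}}{2^{\frac{k}{2}} \Gamma(\frac{k}{2})} \cdot \frac{(x-s)^{-\frac{1}{2}} e^{-\frac{x-s}{2}}}{2^{\frac{1}{2}} \Gamma(\frac{1}{2})} \, ds = \frac{e^{-\frac{x}{2}}}{2^{\frac{k+1}{2}} \Gamma(\frac{k}{2}) \Gamma(\frac{1}{2})} \int_0^x s^{\frac{k}{2} - 1} (x-s)^{-\frac{1}{2}} ds$$

Let’s do the substitution again: $s = xt$, $ds = x \, dt$:

$$f_{\chi^2_{k+1}}(x) = \frac{x^{\frac{k+1}{2} - 1} e^{-\frac{x}{2}}}{2^{\frac{k+1}{2}} \Gamma(\frac{k}{2}) \Gamma(\frac{1}{2})} \int_0^1 t^{\frac{k}{2} - 1} (1-t)^{-\frac{1}{2}} dt$$

Notice that the expression under the integral, divided by $B(\frac{k}{2}, \frac{1}{2})$, is the probability density function of a Beta distribution, which integrates to 1. Hence the integral equals $B(\frac{k}{2}, \frac{1}{2}) = \frac{\Gamma(\frac{k}{2}) \Gamma(\frac{1}{2})}{\Gamma(\frac{k+1}{2})}$.

Thus, $f_{\chi^2_{k+1}}(x) = \frac{x^{\frac{k+1}{2} - 1} e^{-\frac{x}{2}}}{2^{\frac{k+1}{2}} \Gamma(\frac{k+1}{2})}$.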
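The resulting density can be sanity-checked with a short script. This sketch verifies that the Beta function expressed through Gamma functions gives $B(\frac{1}{2}, \frac{1}{2}) = \pi$, that 2 degrees of freedom yield $e^{-x/2}/2$, and that a seeded simulation of sums of squared Gaussians matches the density for an arbitrarily chosen `K = 5` on the interval [2, 6]. All names and parameter values are illustrative assumptions.

```python
import math
import random

def chi2_pdf(x: float, k: int) -> float:
    """Chi-square density with k degrees of freedom."""
    half = k / 2
    return x ** (half - 1) * math.exp(-x / 2) / (2**half * math.gamma(half))

def beta_fn(a: float, b: float) -> float:
    """Beta function expressed through the Gamma function."""
    return math.gamma(a) * math.gamma(b) / math.gamma(a + b)

def interval_probability(a: float, b: float, k: int, steps: int = 2000) -> float:
    """Midpoint-rule integral of the chi-square density over [a, b]."""
    h = (b - a) / steps
    return h * sum(chi2_pdf(a + (i + 0.5) * h, k) for i in range(steps))

print(f"B(1/2, 1/2) = {beta_fn(0.5, 0.5):.6f}, pi = {math.pi:.6f}")
print(f"chi2_2 pdf at 3: {chi2_pdf(3.0, 2):.6f}, exp(-3/2)/2 = {math.exp(-1.5) / 2:.6f}")

# Seeded simulation: sums of K squared standard Gaussians
K = 5
rng = random.Random(1)
samples = [sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(K)) for _ in range(100_000)]
empirical = sum(2.0 <= s <= 6.0 for s in samples) / len(samples)
predicted = interval_probability(2.0, 6.0, K)
print(f"P(2 <= chi2_5 <= 6): empirical={empirical:.4f}, predicted={predicted:.4f}")
```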

A helpful link with this proof is here.

Weibull distribution

If you take a look at the Weibull distribution PDF/CDF, you’ll figure out that it is NOT a special case of the Gamma distribution, because $x$ in the exponent is taken to the power of $m$. However, it is a special case of the Generalized Gamma distribution family, so I decided to write about it here too.

Weibull distribution arises in the Extreme Value Theory and is known as Type III Extreme Value Distribution. There’s a theorem that states that a maximum of a set of i.i.d. normalized random variables can only converge to one of three types of distributions, and Weibull is one of them (the other two being Gumbel and Fréchet). Here is a nice introduction.

Waloddi Weibull was a Swedish engineer and mathematician, who started working in the field of strength of materials, particle size upon grinding etc. in the 1930s and thoroughly described the Weibull distribution in 1951, although its properties were studied much earlier by Fréchet, Rosin & Rammler, von Mises, Fisher, Tippett, Gnedenko and others.

Unfortunately, the theoretical motivation for the Weibull distribution does not exist, and the physical interpretation of the shape parameter $m$ is unclear. The only rationale that Weibull himself provides for the shape of his distribution is that $\frac{(x - x_u)^m}{x_0}$ is the simplest function satisfying the condition of being positive, non-decreasing and vanishing at $x_u$. I quote Weibull’s 1951 paper:

The objection has been stated that this distribution function has no theoretical basis. But in so far as the author understands, there are - with very few exceptions - the same objections against all other df, applied to real populations from natural biological fields, at least in so far as the theoretical has anything to do with the population in question. Furthermore, it is utterly hopeless to expect a theoretical basis for distribution functions of random variables such as strength of materials or of machine parts or particle sizes, the “particles” being fly ash, Cyrtoideae, or even adult males, born in the British Isles.

Weibull distribution is often applied to the problem of the strength of a chain. A chain breaks whenever any link of it breaks. If every link’s strength is Weibull-distributed, the strength of the chain as a whole is Weibull-distributed, too. Let’s look at the cumulative distribution function:

$$F(x) = 1 - e^{-\frac{(x - x_u)^m}{x_0}}$$

Basically it says that p(chain link survives application of a force $x$) = $e^{-\frac{(x - x_u)^m}{x_0}}$. So, the whole chain of $k$ independent links survives with probability $\left(e^{-\frac{(x - x_u)^m}{x_0}}\right)^k = e^{-\frac{k (x - x_u)^m}{x_0}}$, which is still Weibull-distributed (with $x_0$ replaced by $\frac{x_0}{k}$).
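The chain argument can be illustrated with a small simulation, sketched below with Python's `random.weibullvariate(alpha, beta)`, whose scale `alpha` and shape `beta` correspond to a CDF of $1 - e^{-(x/\alpha)^\beta}$. The shape, scale, number of links and tested force are arbitrary illustrative assumptions, and links are assumed to be independent.

```python
import math
import random

SHAPE_M = 2.0      # ASSUMED Weibull shape (the power m)
SCALE = 1.0        # ASSUMED Weibull scale of a single link
LINKS_K = 10       # number of links in the chain
N_CHAINS = 50_000

rng = random.Random(42)

def chain_strength() -> float:
    """A chain is as strong as its weakest link."""
    return min(rng.weibullvariate(SCALE, SHAPE_M) for _ in range(LINKS_K))

def predicted_survival(force: float) -> float:
    """Single-link survival exp(-(x/scale)^m), raised to the power of LINKS_K."""
    return math.exp(-LINKS_K * (force / SCALE) ** SHAPE_M)

strengths = [chain_strength() for _ in range(N_CHAINS)]

force = 0.3
empirical = sum(s > force for s in strengths) / N_CHAINS
print(f"chain survives force {force}: empirical={empirical:.4f}, "
      f"predicted={predicted_survival(force):.4f}")
```

The predicted survival curve is again of the Weibull form with the same shape and a scale shrunk by a factor of $k^{1/m}$, which is what the simulation should reproduce up to sampling noise.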

Written by Boris Burkov who lives in Moscow, Russia, loves to take part in development of cutting-edge technologies, reflects on how the world works and admires the giants of the past.