Lagrange multipliers and duality

May 10, 2022 6 min read

Lagrange multipliers are ubiquitous in optimization theory and natural sciences, such as mechanics and statistical physics. Here I work out its intuition and derivation.

Constrained optimization

Lagrange multipliers in 2D case

Suppose that you need to find a maximum/minimum of a function , constrained on a condition .

Direct solution of such a system would be as follows: using condition we would express through as , substitute this into and find the optimum of in just variable, .

Lagrange multipliers provide a convenient alternative to this straightforward solution in the following form:

So, instead of substituting , we introduce a new variable and solve a system of 3 equations in 3 variables.

Why the solution of this new system is equivalent to the direct method?

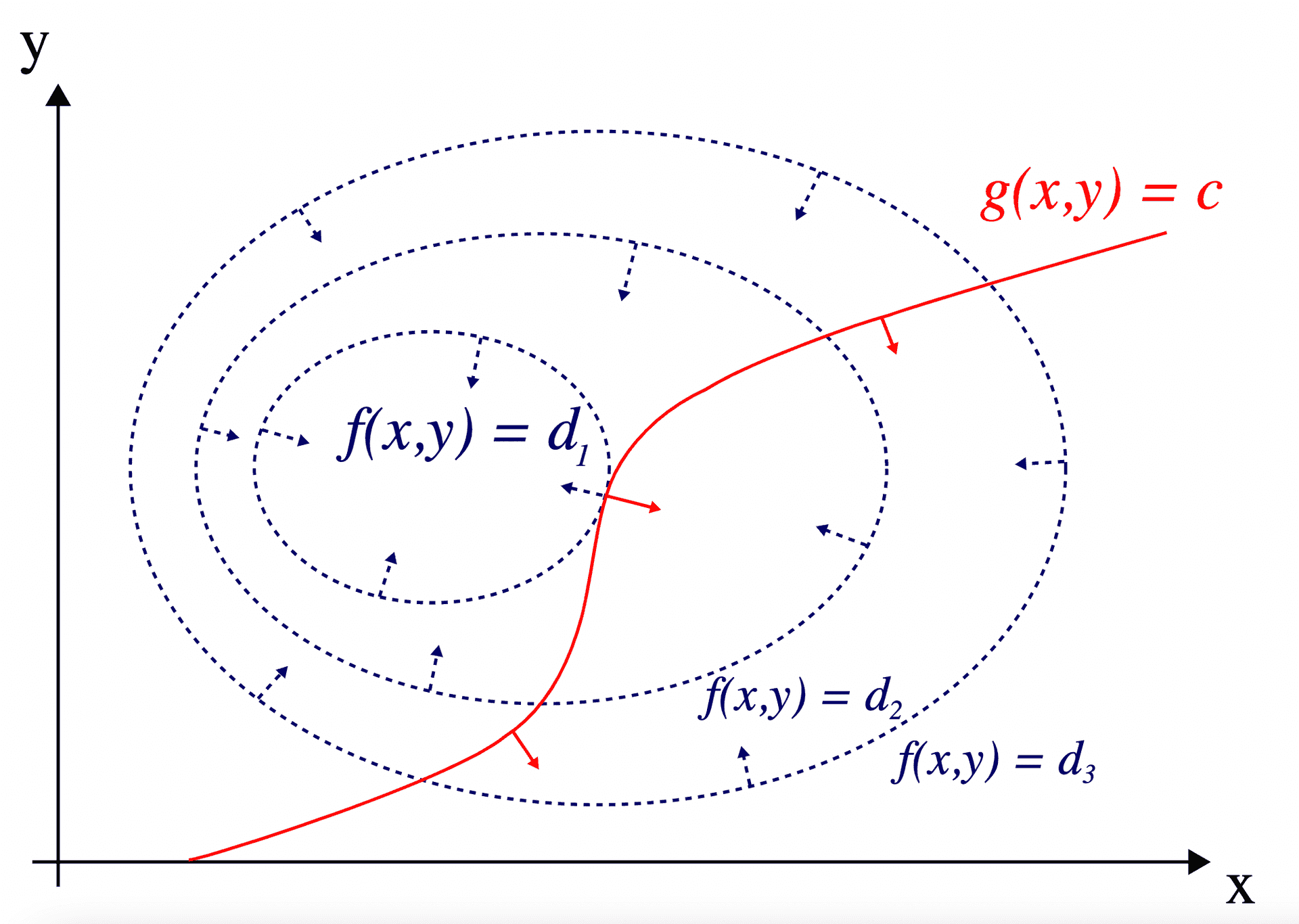

The interpretation of these conditions is as follows: let us draw the levels of .

At the point of curve, the gradient should be orthogonal to the curve , otherwise, it would have continued to move along the curve.

See illustration of 2D case:

Lagrange multipliers in 3D case

In 3D case similarly we optimize a function constrained on two conditions and .

In this case intersection of curves and is approximated by a line.

Again, should be orthogonal to this intersection line. See illustration in 3D case:

Duality and Lagrange dual problem

Instead of solving the original (or primal) constrained optimization problem, it is sometimes more convenient to solve a so-called dual problem.

If the original problem is a Karush-Kuhn-Tucker system:

We can instead try to solve an unconstrained optimization problem:

As Michel Bierlaire jokes if we offered an alpinist a reward for each meter of height he climbs within Alps, we will have to carefully control his location. Instead we can try to change the agreement: we allow him to go wherever he wants, but if he goes to Everest, he gets penalized at least by the difference of heights of Everest and Mont Blanc, so that the solution of our unconstrained optimization is no better than the solution of constrained optimization.

Dual function

Denote .

is called dual function.

Lemma. The dual function is not greater than the constrained minimum of f(x).

Denote the value of , where the minimum of Lagrangian is achieved (i.e. the dual function is sampled):

Denote the point , where the constrained minimum of is achieved.

Then:

Dual problem

Now, among all the dual functions let us find such that gives the tightest lower bound for our unconstrained minimization.

This problem is called dual problem. It gives a lower bound on the solution of the original problem.

Example: linear programming

Suppose that we have a primal linear programming problem:

This primal problem gives rise to the following dual problem:

Basically, we are aiming to find such multipliers for each of the linear equations in the original system, that while the constraints hold (which gives us 3 constraints for 3 variables, , and , the upper limit is as big as possible).

In this particular case there is obviously no single solution, as two conditions cut the space with 2 hyperplanes, their intersection is a line, and there is no upper limit to the linear programming problem.

However, if the system of constraints consisted of 3 equations, intersection of 3 hyperplanes would have consisted of a single highest point. And solutions of the primal and dual problems would’ve been identical

Weak duality and duality gap

If , and are not convex, the solutions of the primal problem and of the Lagrange dual problem diverge by a value, called duality gap.

Let us depict a weird coordinate system, where instead of x, we use and as variables. In that coordinate system if conditions are not convex, the area, delimited by conditions, is not convex as well. Then there is a gap between the primal optimization problem and the dual problem:

Convexity and strong duality

If the functions , and in the original problem were convex (as in linear programming), the solutions of the primal and dual problems are equivalent (this is called strong duality, which holds, if Slater condition is satisfied).

References:

- https://math.stackexchange.com/questions/2578903/lagrange-multipliers-tangency - good stack overflow post on Lagrange multipliers derivation

- https://www.eecis.udel.edu/~xwu/class/ELEG667/Lecture5.pdf - nice lecture on duality

- https://www.stat.cmu.edu/~ryantibs/convexopt-F15/lectures/13-dual-corres.pdf - a leacture on duality by Tibs junior

- https://en.wikipedia.org/wiki/Lagrange_multiplier - wikipedia on Lagrange multipliers

- https://en.wikipedia.org/wiki/Duality_(optimization) - wikipedia on duality

- https://en.wikipedia.org/wiki/Slater%27s_condition - Slater’s condition

- https://optimization.mccormick.northwestern.edu/index.php/Lagrangean_duality - example of economic interpretaiton of linear programming

- https://www.youtube.com/watch?v=4OifjG2kIJQ - proof of weak duality

- https://cims.nyu.edu/~cfgranda/pages/MTDS_spring19/notes/duality.pdf - great post on compressed sensing

Written by Boris Burkov who lives in Moscow, Russia, loves to take part in development of cutting-edge technologies, reflects on how the world works and admires the giants of the past. You can follow me in Telegram